Edge computing architecture and use cases

Written by Jason Gonzalez, Jason Hunt, Mathews Thomas, Ryan Anderson, Utpal Mangla with LF Edge Premier Member Company IBM

This blog originally ran on the IBM website. For more articles from IBM, visit https://developer.ibm.com/articles/.

Developing new services that take advantage of emerging technologies like edge computing and 5G will help generate more revenue for many businesses today, but especially for telecommunications and media companies. IBM works with many telecommunications companies to help explore these new technologies so that they can better understand how the technologies are relevant to current and future business challenges.

The good news is that edge computing is based on an evolution of an ecosystem of trusted technologies. In this article, we will explain what edge computing is, describe relevant use cases for the telecommunications and media industry along with the benefits for other industries, and finally present what an end-to-end architecture that incorporates edge computing can look like.

What is Edge Computing?

Edge computing is composed of technologies that take advantage of computing resources available outside of traditional and cloud data centers, so that the workload is placed closer to where data is created and actions can be taken in response to an analysis of that data. By harnessing and managing the compute power that is available on remote premises, such as factories, retail stores, warehouses, hotels, distribution centers, or vehicles, developers can create applications that:

- Substantially reduce latencies

- Lower demands on network bandwidth

- Increase privacy of sensitive information

- Enable operations even when networks are disrupted
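The last point, continuing to operate while the network is disrupted, is often handled with a store-and-forward pattern: the edge keeps processing locally and queues results until the uplink returns. The following Python sketch illustrates that idea under stated assumptions; `StoreAndForwardBuffer` and the `send` callback are hypothetical names, not part of any IBM or LF Edge API, and a production system would persist the queue to local storage rather than keep it in memory.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForwardBuffer:
    """Queues results produced at the edge until the uplink accepts them."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000):
        # `send` is a hypothetical uplink call: True on success, False when
        # the network is unavailable.
        self.send = send
        # With maxlen set, the oldest results are dropped if an outage
        # outlasts the buffer's capacity.
        self.pending: Deque[dict] = deque(maxlen=max_items)

    def publish(self, result: dict) -> None:
        """Queue a locally computed result, then try to deliver the backlog."""
        self.pending.append(result)
        self.flush()

    def flush(self) -> int:
        """Deliver queued results oldest first; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break  # uplink still down: keep the item for the next attempt
            self.pending.popleft()
            sent += 1
        return sent


# Usage with a simulated uplink that is down at first.
uplink_up = False
delivered: List[dict] = []


def fake_send(item: dict) -> bool:
    if uplink_up:
        delivered.append(item)
    return uplink_up


outbox = StoreAndForwardBuffer(fake_send)
outbox.publish({"reading": 1})  # processed locally, queued
outbox.publish({"reading": 2})  # still queued: the uplink is down
uplink_up = True
sent_after_recovery = outbox.flush()  # delivers both, oldest first
```

Local processing never waits on the network here; only delivery of results does.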

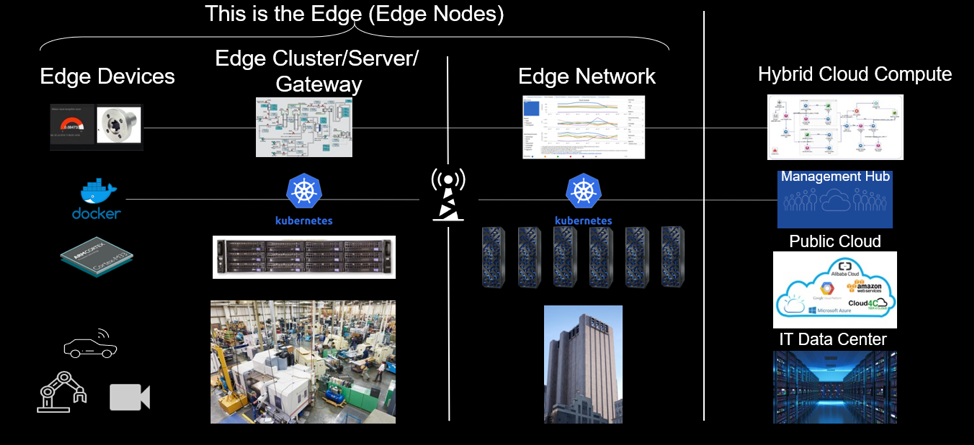

To move the application workload out to the edge, multiple edge nodes might be needed, as shown in Figure 1.

Figure 1. Examples of edge nodes

These are some of the key components that form the edge ecosystem:

- Cloud

This can be a public or private cloud, which can be a repository for container-based workloads such as applications and machine learning models. These clouds also host and run the applications that are used to orchestrate and manage the different edge nodes. Workloads on the edge, both local and device workloads, interact with workloads on these clouds. The cloud can also be a source and destination for any data that is required by the other nodes.

- Edge device

An edge device is a special-purpose piece of equipment that also has compute capacity integrated into it. Interesting work can be performed on edge devices such as an assembly machine on a factory floor, an ATM, an intelligent camera, or an automobile. Often driven by economic considerations, an edge device typically has limited compute resources. It is common to find edge devices that have ARM or x86 class CPUs with 1 or 2 cores, 128 MB of memory, and perhaps 1 GB of local persistent storage. Although edge devices can be more powerful, they are currently the exception rather than the norm.

- Edge node

An edge node is a generic way of referring to any edge device, edge server, or edge gateway on which edge computing can be performed.

- Edge cluster/server

An edge cluster/server is a general-purpose IT computer that is located in a remote operations facility such as a factory, retail store, hotel, distribution center, or bank. An edge cluster/server is typically constructed with an industrial PC or racked computer form factor. It is common to find edge servers with 8, 16, or more cores of compute capacity, 16 GB of memory, and hundreds of GBs of local storage. An edge cluster/server is typically used to run enterprise application workloads and shared services.

- Edge gateway

An edge gateway is typically an edge cluster/server which, in addition to being able to host enterprise application workloads and shared services, also has services that perform network functions such as protocol translation, network termination, tunneling, firewall protection, or wireless connection. Although some edge devices can serve as a limited gateway or host network functions, edge gateways are more often separate from edge devices.

IoT sensors are fixed-function equipment that collect and transmit data to the edge or the cloud but do not have onboard compute, memory, and storage, so containers cannot be deployed on them. These sensors connect to the different nodes but are not reflected in Figure 1 because they are fixed-function equipment.

With a basic understanding of edge computing, let’s take a brief moment to discuss 5G and its impact on edge computing before we discuss the benefits and challenges around edge computing.

Edge Computing and 5G

The advent of 5G, with its significantly improved network capacity, lower latency, higher speeds, and increased efficiency, has made edge computing even more compelling. 5G promises peak data rates of 20 Gbps and the ability to connect a million devices per square kilometer.

Communications service providers (CSPs) can use edge computing and 5G to route user traffic to the lowest-latency edge nodes in a more secure and efficient manner. With 5G, CSPs can also cater to real-time communications for next-generation applications like autonomous vehicles, drones, or remote patient monitoring. Data-intensive applications that require large amounts of data to be uploaded to the cloud can run more effectively by using a combination of 5G and edge computing.
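Selecting the lowest-latency edge node can be sketched as a filter-then-minimize step. This minimal Python example assumes each candidate node reports a measured round-trip time, a health flag, and a load fraction; the node names, numbers, and the 0.8 load ceiling are hypothetical, and the sketch only illustrates the selection logic, not how a CSP's network actually steers traffic.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class EdgeNode:
    name: str
    rtt_ms: float       # most recent measured round-trip time to the user
    healthy: bool = True
    load: float = 0.0   # fraction of capacity in use, 0.0 to 1.0


def pick_edge_node(nodes: Iterable[EdgeNode], max_load: float = 0.8) -> Optional[EdgeNode]:
    """Return the healthy, non-saturated node with the lowest RTT, or None."""
    candidates = [n for n in nodes if n.healthy and n.load < max_load]
    if not candidates:
        return None  # caller can fall back to a regional or central cloud
    return min(candidates, key=lambda n: n.rtt_ms)


nodes = [
    EdgeNode("metro-a", rtt_ms=4.0, load=0.9),       # close, but saturated
    EdgeNode("metro-b", rtt_ms=6.5),
    EdgeNode("regional", rtt_ms=18.0),
    EdgeNode("metro-c", rtt_ms=3.0, healthy=False),  # closest, but unhealthy
]
chosen = pick_edge_node(nodes)  # metro-b
```

Filtering before minimizing keeps a nearby but overloaded or failing node from attracting traffic simply because it is close.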

With the advent of 5G and edge computing, developers will need to continue to focus on making cloud-native applications even more efficient. The continual addition of newer and smaller edge devices will require changes to existing applications so that enterprises can fully leverage the capabilities of 5G and edge computing. In some cases, applications will need to be containerized and run on a very small device. In other cases, the virtualized network components need to be redesigned to take full advantage of the 5G network. Many other cases exist that require evaluation as part of the application development roadmap and future state architecture.

Benefits and challenges of edge computing

As emerging technologies, 5G and edge computing bring many benefits to many industries, but they also bring some challenges along with them.

Core benefits

The benefits of edge computing technology include these core benefits:

- Performance: Near-instant compute and analytics at the edge lower latency and therefore greatly increase performance. With the advent of 5G, it is possible to communicate rapidly with the edge, and applications that are running at the edge can quickly respond to the ever-growing demand of consumers.

- Availability: Critical systems need to operate irrespective of connectivity. The current communications flow has many potential points of failure, including the company’s core network and multiple hops across multiple network nodes, with security risks along the network paths. With edge computing, communication takes place primarily between the consumer/requestor and the device or local edge node, which removes many of these points of failure and increases the availability of the systems.

- Data security: In edge computing architectures, the analytics data potentially never leaves the physical area where it is gathered and is used within the local edge. Security efforts can focus primarily on the edge nodes, which makes the environment easier to manage and monitor and the data more secure.
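Part of the latency advantage is simple physics: a signal cannot cross a long network path faster than light travels through fiber (roughly two thirds of its speed in a vacuum, or about 200 km per millisecond). The short Python calculation below computes that lower bound on round-trip time for two hypothetical distances; real latencies are higher once queuing, processing, and routing detours are added.

```python
# Light in optical fiber travels at roughly two thirds of its vacuum speed,
# which is about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber, in ms.

    Queuing, processing, and indirect routing only add to this figure.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical one-way distances from the user to each compute location.
for label, km in [("local edge", 10), ("cloud region", 1500)]:
    print(f"{label}: at least {min_rtt_ms(km):.1f} ms round trip")
```

For these distances the floor is about 0.1 ms for the local edge versus 15 ms for the distant cloud region, before any processing time is counted.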

Additional benefits, with additional challenges

These additional benefits do not come without additional challenges, such as:

- Managing at scale

- Making workloads portable

- Security

- Emerging technologies and standards

Managing at scale

When you incorporate edge computing, workload locations shift and applications and analytics capabilities are deployed in a more distributed fashion. Managing this change in an architecturally consistent way requires orchestration and automation tools in order to scale.

For example, if an application moves from one data center with always-available support to hundreds of locations at the local edge that are not readily accessible, or that lack that kind of local technical support, the way the lifecycle and support of the application are managed must change.

To address this challenge, technical support teams will need new tools and training to manage, orchestrate, and automate this new, complex environment. Teams will require more than traditional network operations tools (and even more than software-defined network operations tools), because they will need tools that help manage application workloads in the context of the network across many more distributed environments than before, each with differing strengths and capabilities. In future articles in this series, we will look at these application and network tools in more detail.
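Orchestration tools that manage many sites commonly follow a desired-state, or reconciliation, pattern (the approach Kubernetes controllers use): compare what each site should run with what it actually runs, and act only on the difference. The Python sketch below illustrates one planning pass; the site names, version strings, and reachability map are hypothetical, and a real orchestrator would also carry out the actions and retry deferred sites.

```python
from typing import Dict, List, Optional


def plan_rollout(desired_version: str,
                 current: Dict[str, Optional[str]],
                 reachable: Dict[str, bool]) -> Dict[str, List[str]]:
    """Compare each site's actual state with the desired state.

    Returns the sites grouped by the action a reconciliation pass would take.
    Unreachable sites are deferred rather than treated as failures, so a later
    pass can pick them up once connectivity returns.
    """
    plan: Dict[str, List[str]] = {
        "in_sync": [], "install": [], "upgrade": [], "deferred": [],
    }
    for site, version in sorted(current.items()):
        if version == desired_version:
            plan["in_sync"].append(site)
        elif not reachable.get(site, False):
            plan["deferred"].append(site)
        elif version is None:  # application not installed at this site yet
            plan["install"].append(site)
        else:
            plan["upgrade"].append(site)
    return plan


# Hypothetical fleet of four retail sites; None means "not installed".
current = {"store-001": "1.4", "store-002": None, "store-003": "1.3", "store-004": "1.3"}
reachable = {"store-001": True, "store-002": True, "store-003": True, "store-004": False}
plan = plan_rollout("1.4", current, reachable)
```

Because each pass only computes the difference between desired and actual state, the same loop can run repeatedly across hundreds of sites without special handling for sites that were offline last time.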

Making workloads portable

To operate at scale, the workloads being considered for edge computing localization need to be modified to be more portable.

- First, size does matter. Reducing latency requires moving the workload closer to the edge, and edge components offer fewer compute resources to run it, so the overall size of a workload might limit the potential of edge computing.

- Second, adopting a portability standard or set of standards can be difficult with many varied workloads to consider. Also, the standard should allow for management of the full lifecycle of the application, from build through run and maintain.

- Third, work will need to be done on how best to break up workloads into sub-components to take advantage of the distributed architecture of edge computing. This would allow certain parts of a workload to run on an edge device while others run on an edge cluster/server, or in any other distribution across edge components. In a CSP, this would typically include migrating to a combination of network function virtualization (for network workloads) and container workloads (for application workloads and, in the future, network workloads), where applicable and possible. Depending on the current application environment, this move might be a large effort.

To address this challenge in a reasonable way, workloads can be prioritized based on a number of factors, including benefit of migration, complexity, and resource/time to migrate. Many network and application partners are already working on migrating capabilities to container-based approaches, which can aid in addressing this challenge.
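One way to make this prioritization explicit is a weighted score over the factors listed above. The following Python sketch assumes each workload has been rated from 1 to 5 for benefit, complexity, and effort (resources and time); the workload names, ratings, and weights are hypothetical placeholders that an organization would replace with its own assessment.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    benefit: int     # expected benefit of migration, 1 (low) to 5 (high)
    complexity: int  # migration complexity, 1 (low) to 5 (high)
    effort: int      # resources/time to migrate, 1 (low) to 5 (high)


# Hypothetical weights; a real assessment would tune these.
WEIGHTS = {"benefit": 0.5, "complexity": 0.25, "effort": 0.25}


def score(c: Candidate) -> float:
    """Benefit raises the score; complexity and effort lower it."""
    return (WEIGHTS["benefit"] * c.benefit
            - WEIGHTS["complexity"] * c.complexity
            - WEIGHTS["effort"] * c.effort)


def prioritize(candidates: List[Candidate]) -> List[str]:
    """Return workload names ordered from most to least attractive to migrate."""
    return [c.name for c in sorted(candidates, key=score, reverse=True)]


workloads = [
    Candidate("video-analytics", benefit=5, complexity=3, effort=3),
    Candidate("billing-batch", benefit=1, complexity=2, effort=2),
    Candidate("iot-ingest", benefit=4, complexity=1, effort=2),
]
ranking = prioritize(workloads)
```

With these ratings, the simpler `iot-ingest` workload edges out the higher-benefit but harder `video-analytics` migration, which is the kind of trade-off the weights are meant to surface.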

Security

While data security is a benefit, in that the data can be limited to certain physical locations for specific applications, overall security is an additional challenge when adopting edge computing. Physical devices at the device edge might not be able to leverage existing security standards or solutions because of their limited capabilities.

To compensate, the upstream infrastructure at the local edge might have additional security concerns to address. In addition, with multiple device edges, security is now distributed and more complex to handle.

Emerging technologies and standards

With many standards in this ecosystem newly created or quickly evolving, it will be difficult to maintain technology decisions long term. A specific edge device or technology chosen today might be superseded by the next competing device, making this a challenging environment to operate in. With the right tools in place to manage these varied workloads along with their entire application lifecycle, it becomes easier to introduce new devices or capabilities, or to replace existing devices, as the technologies and standards evolve.

Cross Industry Use Cases

In any complex environment, many challenges occur and there are many ways to address them. Whether a business problem or use case could or should be solved with edge computing must be evaluated on a case-by-case basis to determine whether it makes sense to pursue.

For reference, let’s discuss some potential industry use cases for consideration, both examples and real solutions. A common theme across all these industries is the network that will be provided by the CSP. The devices with applications need to operate within the network and the advent of 5G makes it even more compelling for these industries to start seriously considering edge computing.

Use case #1: Video Surveillance

Using video to identify key events is rapidly spreading across all domains and industries. Transmitting all the data to the cloud or data center is expensive and slow. Edge computing nodes that consist of smart cameras can do the initial level of analytics, including recognizing entities of interest. The footage of interest can then be transmitted to a local edge for further analysis and an appropriate response, such as raising alerts. Content that cannot be handled at the local edge can be sent to the cloud or data center for in-depth analysis.
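The tiered flow just described can be sketched as two small decision functions: one for the smart camera at the device edge and one for the local edge. In this Python example the labels, confidence values, and the 0.6 alert threshold are hypothetical; a real camera would run a trained vision model rather than receive ready-made labels.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Detection:
    frame_id: int
    label: str         # what the camera's on-board model recognized
    confidence: float  # model confidence, 0.0 to 1.0


# Hypothetical policy values, for illustration only.
LABELS_OF_INTEREST = {"person", "vehicle"}
ALERT_CONFIDENCE = 0.6


def camera_forwards(d: Detection) -> bool:
    """Device edge: transmit only footage that contains an entity of interest."""
    return d.label in LABELS_OF_INTEREST


def local_edge_action(d: Detection) -> str:
    """Local edge: alert on clear cases, send ambiguous ones to the cloud."""
    return "raise_alert" if d.confidence >= ALERT_CONFIDENCE else "send_to_cloud"


stream = [
    Detection(1, "background", 0.99),
    Detection(2, "person", 0.91),
    Detection(3, "vehicle", 0.42),
    Detection(4, "background", 0.97),
]
forwarded: List[Detection] = [d for d in stream if camera_forwards(d)]
actions: Dict[int, str] = {d.frame_id: local_edge_action(d) for d in forwarded}
```

Only two of the four frames leave the camera, and only one of those continues to the cloud, which is where the bandwidth and latency savings come from.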

Consider this example: A major fire occurs, and it is often difficult to differentiate humans from other burning objects. In addition, the network can become heavily loaded in such situations. With edge computing, cameras that are located close to the event can determine whether a human is caught in the fire by identifying characteristics typical of a human being and clothing that humans normally wear that might survive the fire. As soon as a camera recognizes a human in the video content, it starts transmitting the video to the local edge. Hence, the network load is reduced because transmission happens only when a human is recognized. In addition, the local edge is close to the device edge, so latency is minimal. Lastly, the local edge can contact the appropriate authorities directly instead of transmitting the data to the data center, which would be slower and might not even be possible if the network from the fire site to the data center is down.

Figure 2. Depiction of intelligent video example

Use case #2: Smart Cities

Operating and governing cities has become a challenging mission due to many interrelated issues such as increasing operation cost from aging infrastructure, operational inefficiencies, and increasing expectations from a city’s citizens. Advancement of many technologies like IoT, edge computing, and mobile connectivity has helped smart city solutions to gain popularity and acceptance among a city’s citizens and governance alike.

IoT devices are the basic building blocks of any smart city solution. Embedding these devices into the city’s infrastructure and assets helps monitor infrastructure performance and provides insightful information about the behavior of these assets. Because of the need to make real-time decisions and to avoid transmitting large amounts of sensor data, edge computing becomes a necessary technology for delivering a city’s mission-critical solutions such as traffic, flood, security and safety, and critical infrastructure monitoring.
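As a concrete illustration of deciding locally while sending less data upstream, the Python sketch below summarizes a window of water-level readings at the edge and raises a flood alert without waiting on the cloud. The sensor, units, and the 350 cm threshold are hypothetical assumptions chosen for the example.

```python
from statistics import mean
from typing import Dict, List, Union

# Hypothetical alert threshold for a river-level sensor, in centimeters.
FLOOD_ALERT_CM = 350.0


def summarize_window(readings_cm: List[float]) -> Dict[str, Union[int, float, bool]]:
    """Reduce one window of raw readings to a small summary at the edge.

    Only the summary is sent upstream; the alert decision is made locally,
    so it does not depend on a round trip to the cloud.
    """
    peak = max(readings_cm)
    return {
        "count": len(readings_cm),
        "mean_cm": round(mean(readings_cm), 1),
        "max_cm": peak,
        "alert": peak >= FLOOD_ALERT_CM,
    }


summary = summarize_window([310.0, 322.5, 341.0, 356.5])
```

However many raw readings arrive in a window, the upstream message stays the same small size.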

Use case #3: Connected Cars

Connected cars can gather data from various sensors within the vehicle, including data on user behavior. The initial analysis and compute of the data can be executed within the vehicle. Relevant information can be sent to the base station, which then transmits the data to the relevant endpoint, such as a content delivery network in the case of a video transmission or an automobile manufacturer’s data center. The manufacturer might also have a relationship with the CSP, in which case the compute node might be at the base station owned by the CSP.
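The routing decision described above can be sketched as a small function that runs after in-vehicle analysis. In this Python example, the event kinds, the endpoint names, and the `manufacturer_uses_csp` flag are all hypothetical stand-ins for the content delivery network, the manufacturer's data center, and CSP-owned compute at the base station.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical endpoint identifiers, for illustration only.
CDN = "cdn"
MANUFACTURER_DC = "manufacturer-dc"
CSP_BASE_STATION = "csp-base-station"


@dataclass
class VehicleEvent:
    kind: str       # e.g. "video", "diagnostic", "telemetry"
    relevant: bool  # outcome of the initial in-vehicle analysis


def destination(event: VehicleEvent, manufacturer_uses_csp: bool = False) -> Optional[str]:
    """Return where an event should be forwarded, or None to keep it in the car."""
    if not event.relevant:
        return None  # filtered out by in-vehicle analysis; nothing is sent
    if event.kind == "video":
        return CDN
    # Other relevant data goes to the manufacturer, or to CSP-hosted compute
    # at the base station when the manufacturer has that arrangement.
    return CSP_BASE_STATION if manufacturer_uses_csp else MANUFACTURER_DC
```

For example, `destination(VehicleEvent("diagnostic", True))` returns `"manufacturer-dc"`, while the same event with `manufacturer_uses_csp=True` is handled at the CSP's base station.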

Use case #4: Manufacturing

Rapid response to manufacturing processes is essential to reduce product defects and improve efficiencies. Analytic algorithms monitor how well each piece of equipment is running and adjust the operating parameters to improve its efficiency. Analytic algorithms also detect and predict when a failure is likely to occur so that maintenance can be scheduled on the equipment between runs. Different edge devices are capable of differing levels of processing and relevant information can be sent to multiple edges including the cloud.

Consider this example: A manufacturer of electric bicycles is trying to reduce downtime. There are over 3,000 pieces of equipment on the factory floor, including presses, assembly machines, paint robots, and conveyors. An outage in a production run costs $250,000 per hour. Operating at peak efficiency with no unplanned outages is the difference between making a profit and not. To operate smoothly, the factory needs the following to run at the edge:

- Analytic algorithms that can monitor how well each piece of equipment is running and then adjust the operating parameters to improve efficiency.

- Analytic algorithms that can detect and predict when a failure is likely to occur so that maintenance can be scheduled on the equipment between runs.
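To make the second requirement concrete, the following Python sketch flags equipment readings that deviate sharply from their recent history by using a rolling z-score. This is a deliberately simple, swapped-in technique, not a predictive maintenance model: it detects anomalies rather than forecasting failures, and the vibration values, window size, and 3.0 limit are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List


def flag_anomalies(readings: Iterable[float], window: int = 5, z_limit: float = 3.0) -> List[int]:
    """Return indices of readings far outside the spread of the previous `window` readings."""
    history: Deque[float] = deque(maxlen=window)
    flagged: List[int] = []
    for i, value in enumerate(readings):
        if len(history) == window:
            recent = list(history)
            mu, sigma = mean(recent), pstdev(recent)
            # Skip perfectly flat history, where the z-score is undefined.
            if sigma > 0 and abs(value - mu) / sigma > z_limit:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical vibration readings (mm/s) from one machine; index 7 is a spike.
vibration_mm_s = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 6.8, 2.1]
anomalies = flag_anomalies(vibration_mm_s)
```

A check this light can run on a constrained edge device; the customized models described next would replace it where real failure prediction is needed.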

Predicting failure can be complex and requires customized models for each use case. For one example of how these types of models can be created, refer to this code pattern, “Create predictive maintenance models to detect equipment breakdown risks.” Some of these models need to run on the edge, and our next set of tutorials will explain how to do this.

Figure 3. Depiction of manufacturing example

Overall Architecture

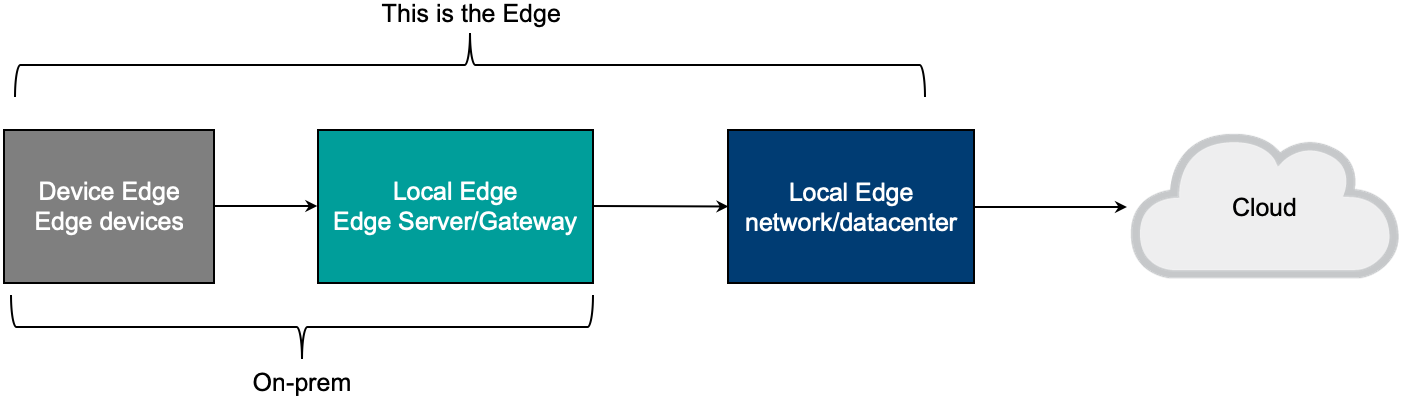

As we discussed earlier, edge computing consists of three main nodes:

- Device edge, where the edge devices sit

- Local edge, which includes both the infrastructure to support the application and also the network workloads

- Cloud, or the nexus of your environment, where everything comes together that needs to come together

Figure 4 represents an architecture overview of these details with the local edge broken out to represent the workloads.

Figure 4. Edge computing architecture overview

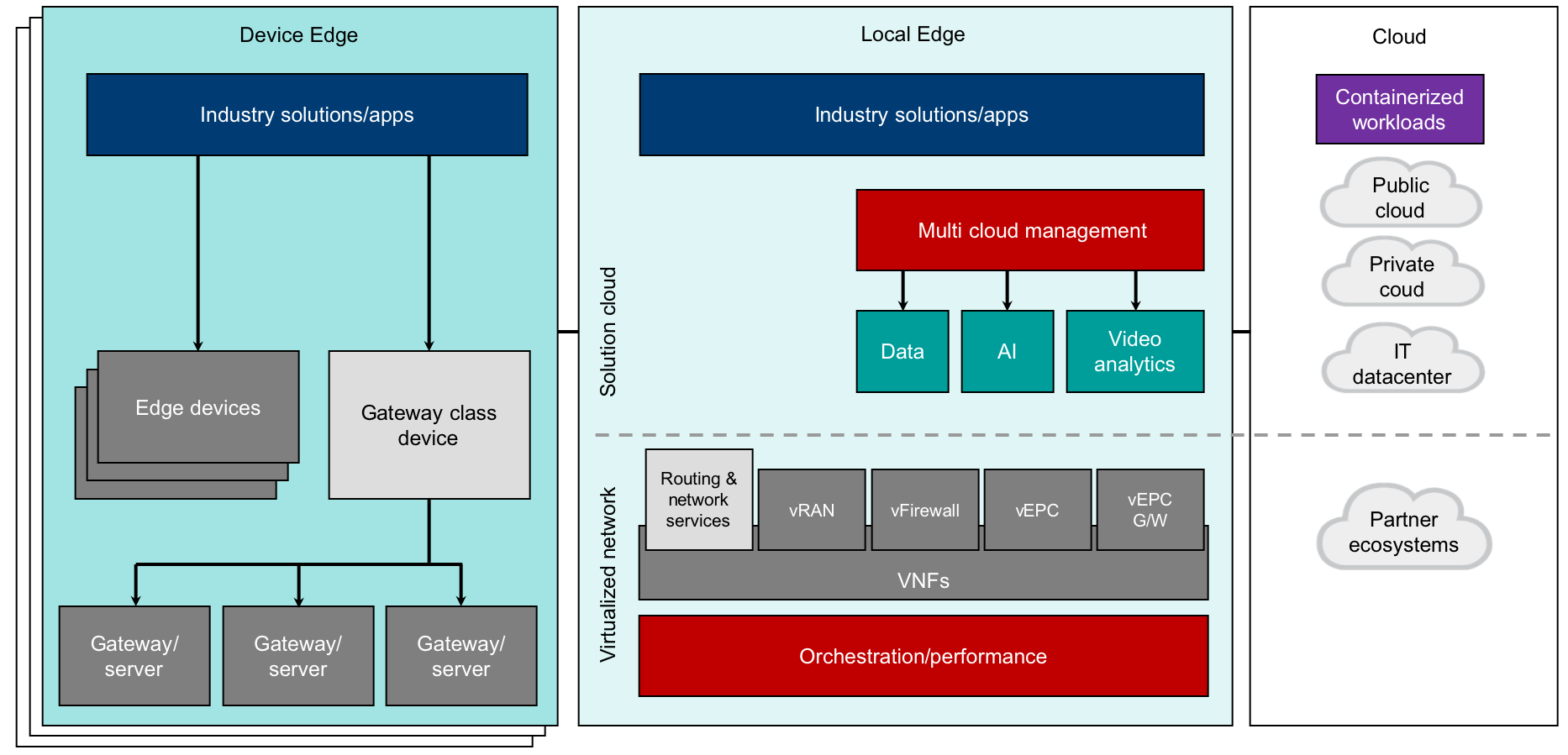

Each of these nodes is an important part of the overall edge computing architecture.

- Device Edge

The actual devices running on-premises at the edge, such as cameras, sensors, and other physical devices that gather data or interact with edge data. Simple edge devices gather or transmit data, or both. More complex edge devices have the processing power to do additional activities. In either case, it is important to be able to deploy and manage the applications on these edge devices. Examples of such applications include specialized video analytics, deep learning AI models, and simple real-time processing applications. IBM’s approach (in its IBM Edge Computing solutions) is to deploy and manage containerized applications on these edge devices.

- Local Edge

The systems running on-premises or at the edge of the network. The edge network layer and edge cluster/servers can be separate physical or virtual servers existing in various physical locations, or they can be combined in a hyperconverged system. This architecture layer has two primary sublayers. The components required to manage the applications in these architecture layers, as well as the applications on the device edge, reside here.

- Application layer: Applications that cannot run at the device edge because their footprint is too large for the device run here. Example applications include complex video analytics and IoT processing.

- Network layer: Physical network devices will generally not be deployed due to the complexity of managing them. The entire network layer is mostly virtualized or containerized. Examples include routers, switches, or any other network components that are required to run the local edge.

- Cloud

This architecture layer is generically referred to as the cloud, but it can run on premises or in the public cloud. This architecture layer is the source for workloads, which are applications that need to handle the processing that is not possible at the other edge nodes, and for the management layers. Workloads include application and network workloads that are deployed to the different edge nodes by using the appropriate orchestration layers.
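The split between layers can be illustrated with a simple placement rule: run each workload on the layer closest to the data that has room for it, falling back toward the cloud. The Python sketch below assumes memory is the only constraint and uses capacities loosely based on the typical figures given earlier (128 MB for an edge device, 16 GB for an edge server); the workload names and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Assumed memory capacity per layer, in MB, ordered closest-to-the-data first.
# The cloud is treated as effectively unbounded.
LAYER_MEMORY_MB: Dict[str, float] = {
    "device_edge": 128,
    "local_edge": 16 * 1024,
    "cloud": float("inf"),
}


@dataclass
class Workload:
    name: str
    memory_mb: int


def place(workloads: List[Workload]) -> Dict[str, str]:
    """Assign each workload to the closest layer whose capacity fits it."""
    placement: Dict[str, str] = {}
    for w in workloads:
        # Dicts preserve insertion order, so this walks device, local, cloud.
        for layer, capacity in LAYER_MEMORY_MB.items():
            if w.memory_mb <= capacity:
                placement[w.name] = layer
                break
    return placement


placement = place([
    Workload("motion-filter", 64),
    Workload("complex-video-analytics", 4096),
    Workload("model-retraining", 65536),
])
```

A real orchestrator would also track CPU, storage, and how much capacity is already in use on each node, which this sketch ignores.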

Figure 5 illustrates a more detailed architecture that shows which components are relevant within each edge node. Duplicate components such as Industry Solutions/Apps exist in multiple nodes because certain workloads might be better suited to either the device edge or the local edge, and other workloads might be moved dynamically between nodes under certain circumstances, either manually or through automation. It is important to manage workloads in discrete units: the less discrete a workload is, the more limited our options for deploying and managing it.

While a focus of this article has been on application and analytics workloads, it should also be noted that network function is a key set of capabilities that should be incorporated into any edge strategy and thus our edge architecture. The adoption of tools should also take into consideration the need to handle application and network workloads in tandem.

Figure 5. Edge computing architecture overview – Detailed

We will be exploring every aspect of this architecture in more detail in upcoming articles. However, for now, let’s take a brief look at one real implementation of a complex edge computing architecture.

Sample implementation of an edge computing architecture

In 2019, IBM partnered with telecommunications companies and other technology participants to build a Business Operation System solution. The focus of the project was to allow CSPs to manage and deliver multiple high-value products and services to market more quickly and efficiently, including capabilities around 5G.

Edge computing is a part of the overall architecture because it was necessary to provide key services at the edge. The following steps illustrate the implementation; further extensions have since been made. The numbers below refer to the numbers in Figure 6:

1. A B2B customer goes to a portal and orders a service around video analytics using drones.

2. The appropriate containers are deployed to the different edge nodes. These containers include visual analytics applications and a network layer to manage the underlying network functionality required for the new service.

3. The service is provisioned, and drones start capturing the video.

4. Initial video processing is done by the drones and the device edge.

5. When an item of interest is detected, it is sent to the local edge for further processing.

6. At some point, new and unexpected features begin to appear in the video, so it is determined that a new model needs to be deployed to the edge device, and a new model is deployed.
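Step 6 does not say how the need for a new model is detected. One simple, hypothetical trigger is to watch how often the deployed model fails to classify what it sees, as in the Python sketch below; the "unknown" label, the 50-prediction window, the 20% limit, and the version strings are assumptions made for illustration.

```python
from typing import List

# Hypothetical policy values, for illustration only.
UNKNOWN_LABEL = "unknown"
WINDOW = 50
UNKNOWN_RATE_LIMIT = 0.2


def needs_new_model(labels: List[str], window: int = WINDOW) -> bool:
    """Return True when too many recent predictions were unrecognized."""
    recent = labels[-window:]
    if not recent:
        return False
    unknown = sum(1 for label in recent if label == UNKNOWN_LABEL)
    return unknown / len(recent) > UNKNOWN_RATE_LIMIT


def model_to_run(current_model: str, latest_model: str, labels: List[str]) -> str:
    """Pick the model version the edge device should be running next."""
    return latest_model if needs_new_model(labels) else current_model


steady = ["drone", "vehicle"] * 24 + ["unknown"] * 2    # 4% unknown
drifting = ["vehicle"] * 30 + ["unknown"] * 20          # 40% unknown
```

In a full system, the decision would be handed to the orchestration layer, which deploys the new model container to the edge device.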

Figure 6. Edge computing architecture overview – TM Forum Catalyst Example

As we continue to explore edge computing in upcoming articles, we will focus more and more on the details around edge computing, but let’s remember that edge computing plays a key role as part of a strategy and architecture, an important part, but only one part.

Conclusion

In this brief overview of edge computing technology, we’ve shown how edge computing is relevant to challenges faced by many industries, but especially the telecommunications industry.

The edge computing architecture identifies the key layers of the edge: the device edge (which includes edge devices), the local edge (which includes the application and network layers), and the cloud edge. But we’re just getting started. Our next article in this series will dive deeper into the different layers and tools that developers need to implement an edge computing architecture.